Digital teams are under more pressure than ever. They must create more content across more channels, often in real time, while still meeting higher expectations for governance, trust and quality. This balancing act has led to a growing reliance on AI. What started as a novelty tool for experimentation is now a necessity woven directly into the workflow.

At Brightspot Illuminate, three experts — Ravi Singh (President and Chief Product Officer), Lucy Collins (Director of Customer Engagement and Product Delivery) and Paul Easterbrooks (Director of Applied AI Engineering) — explored how AI is changing every stage of the content lifecycle. Their message was clear: when used responsibly, AI can help organizations achieve speed and scale without losing control of their brand voice.

Every AI action in our platform is tagged, logged and risk-scored, so governance and observability are built in from the ground up.

AI as ambient intelligence

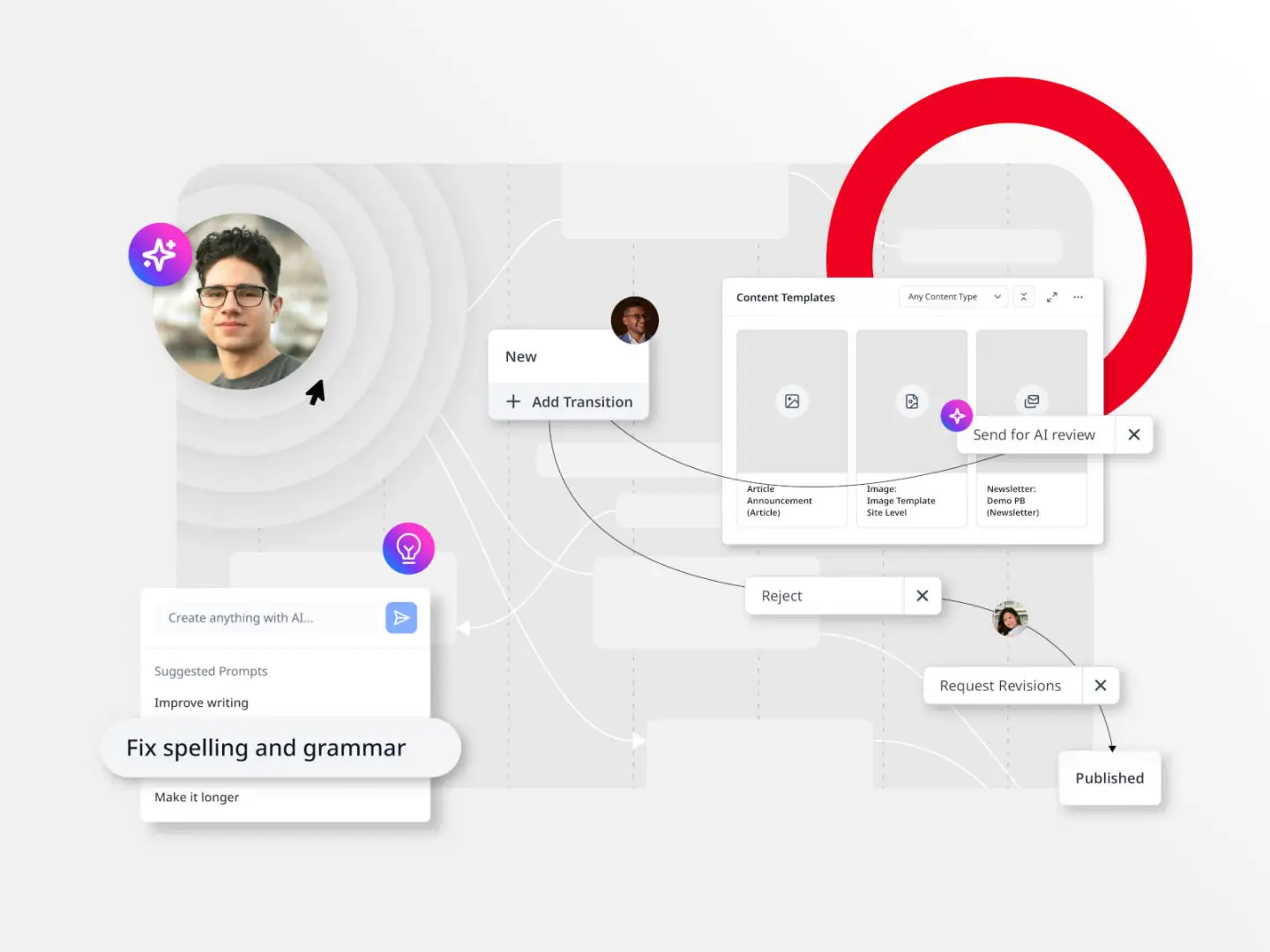

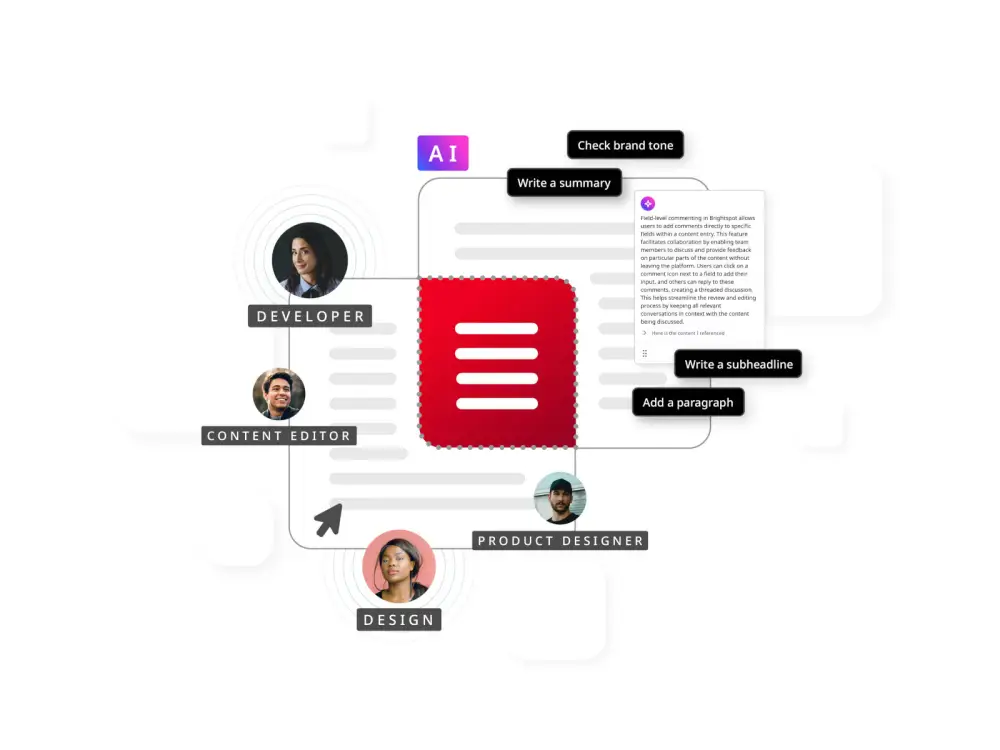

For Singh, AI is not just a set of features or a standalone tool. He describes it as “ambient intelligence” — a constant presence inside workflows, plugins and publishing actions. Instead of requiring teams to jump between platforms, AI sits natively inside the CMS so creators can brainstorm, draft, test and ship content without breaking focus.

This idea of ambient intelligence reflects a shift in how digital teams work. When AI is embedded in everyday tasks, it reduces context switching and removes friction. Singh explained it this way: “AI is enmeshed in everything we do. It enhances the process, not complicates it.” The result is not only efficiency but also a smoother experience for creators who want to spend more time on creative thinking and less on repetitive steps.

Human first, not AI first

While efficiency is valuable, Collins emphasized that AI must remain in a supporting role. “Human-first means human-led,” she said. AI can accelerate tasks like research, first drafts and analysis, but it should never replace the qualities that only people bring — judgment, empathy and originality.

Collins also warned of the risks of unclear governance. If employees cannot see where AI is being used, they cannot manage it effectively. This lack of transparency can lead to uneven quality, blind spots in review processes and, in some cases, erosion of trust with audiences. By contrast, teams that are upfront about how AI contributes to content can build confidence both internally and externally.

She noted that organizations must invest in training. It is not enough to simply provide AI tools; teams need to understand how to use them responsibly and how to spot common “AI tells,” such as generic openings or robotic transitions. In her view, this skill set will soon be as essential as writing or editing.

AI should be embedded where you already work; we don’t want to introduce new tools just for the sake of it.

Build trust with governance

Easterbrooks focused on the safeguards that keep AI outputs reliable and trustworthy. “Eighty percent of outputs may be fine but the last 20 percent needs human review,” he said. That final review step is where brand safety, accuracy and tone are confirmed before content reaches audiences.

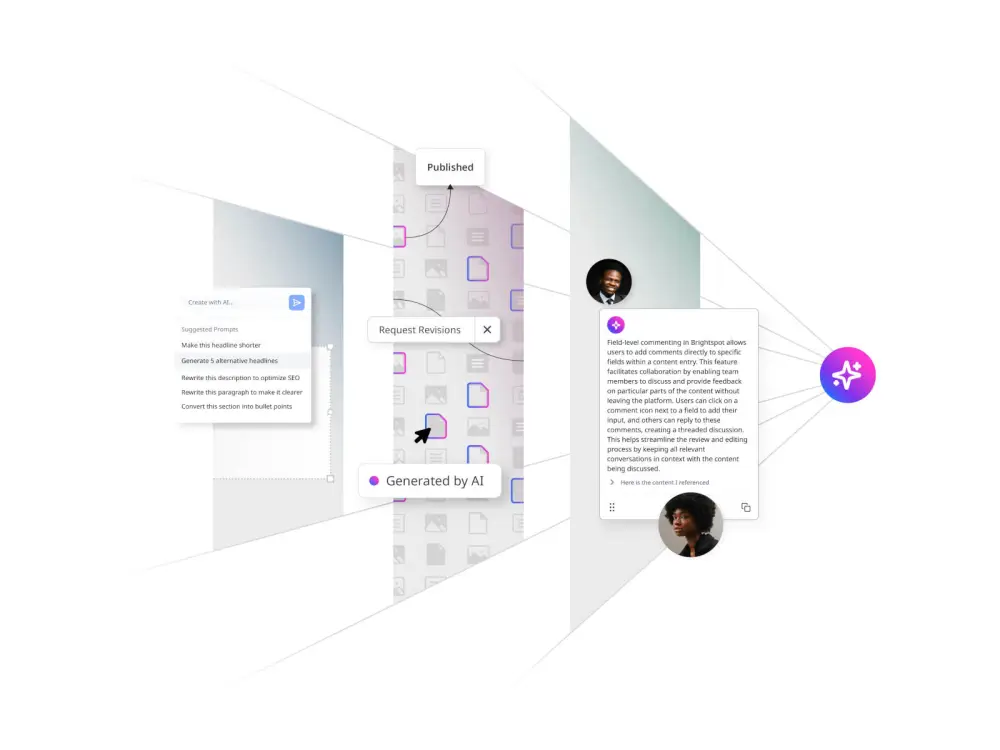

Brightspot supports this with detailed oversight tools. Every AI action can be tagged, logged and risk-scored, so editors know exactly where automation played a role. Permissions determine who can generate or approve AI outputs, and guardrails protect against issues such as exposure of personally identifiable information (PII), harmful content or brand safety risks.

This governance layer transforms AI from a risky shortcut into a trusted partner. Teams can experiment and innovate knowing that every step of the process is traceable and accountable.
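The following minimal Python sketch makes the oversight model more concrete. It shows how an AI action might be tagged, logged, risk-scored and gated by role permissions. It is not Brightspot's implementation or API. The role names, the `RISK_RULES` table, the 0.5 review threshold and the functions `record_ai_action`, `approve` and `require` are all hypothetical. The only guardrail shown is a crude regex check for email addresses and phone numbers, standing in for real PII detection.

```python
import re
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional

# Hypothetical guardrail rules: name -> (pattern, risk weight between 0 and 1).
# Crude regexes stand in for real PII detection.
RISK_RULES = {
    "pii_email": (re.compile(r"[\w.+-]+@[\w-]+\.[\w.]+"), 0.8),
    "pii_phone": (re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"), 0.8),
}
REVIEW_THRESHOLD = 0.5  # hypothetical: scores at or above this need human sign-off

# Hypothetical role-based permissions: who may generate or approve AI output.
ROLE_PERMISSIONS = {
    "author": {"generate"},
    "editor": {"generate", "approve"},
}


@dataclass
class AIActionRecord:
    action: str  # e.g. "draft" or "rewrite"
    user: str
    output: str
    tags: list = field(default_factory=list)
    risk_score: float = 0.0
    needs_review: bool = False
    approved_by: Optional[str] = None
    timestamp: str = ""


AUDIT_LOG = []  # append-only log of every AI action (hypothetical, in memory)


def require(role: str, permission: str) -> None:
    """Raise PermissionError if the role lacks the given permission."""
    if permission not in ROLE_PERMISSIONS.get(role, set()):
        raise PermissionError(f"role {role!r} may not {permission}")


def record_ai_action(action: str, user: str, role: str, output: str) -> AIActionRecord:
    """Check permissions, then tag, risk-score and log one AI action."""
    require(role, "generate")
    tags = ["ai-generated", f"ai-action:{action}"]
    score = 0.0
    for name, (pattern, weight) in RISK_RULES.items():
        if pattern.search(output):
            tags.append(f"risk:{name}")
            score = max(score, weight)  # the highest-risk matching rule sets the score
    record = AIActionRecord(
        action=action,
        user=user,
        output=output,
        tags=tags,
        risk_score=score,
        needs_review=score >= REVIEW_THRESHOLD,
        timestamp=datetime.now(timezone.utc).isoformat(),
    )
    AUDIT_LOG.append(record)
    return record


def approve(record: AIActionRecord, role: str, user: str) -> None:
    """Human sign-off: only roles with 'approve' may clear a flagged output."""
    require(role, "approve")
    record.approved_by = user
    record.needs_review = False
```

In this sketch, any output that trips a rule is held for human review until someone with the approve permission signs off. That mirrors the final review step Easterbrooks describes. A production system would need far more robust detection than regular expressions.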

From research to creation to delivery

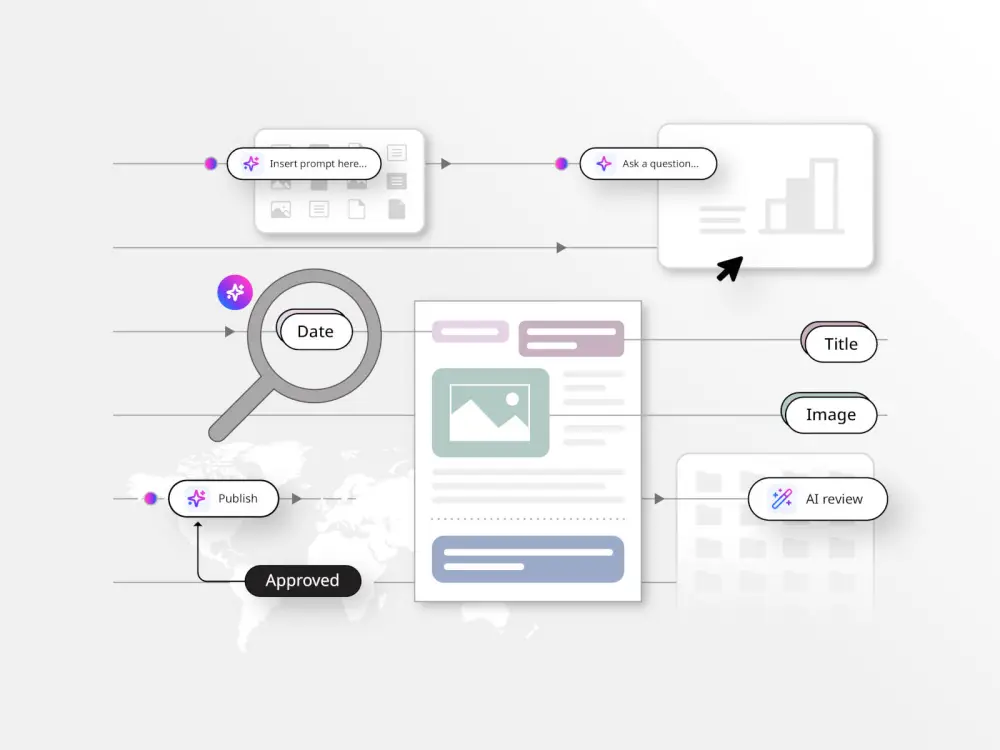

Brightspot’s approach to AI mirrors the full lifecycle of content creation:

- Ask AI turns the CMS into a research assistant. Authors can pull insights, find related work or generate ideas without leaving the edit screen, which speeds up the earliest stages of content development.

- Create with AI embeds drafting and rewriting tools into forms and the rich-text editor. Writers can use it to polish sentences, adjust tone or generate variations that align with brand voice and author personas.

- AI governance provides transparency, showing exactly where AI contributed and flagging risks for editors and admins. This helps teams stay compliant without slowing them down.

- Automated workflows handle the logistical side of publishing. Tasks such as translation, tagging or asset generation are triggered by rules and roles, reducing manual errors and helping teams move faster.

Together, these capabilities create a seamless system where AI is not an add-on but a fully integrated partner in the publishing process.

Use AI with integrity

All three experts returned to the same principle: AI should support creativity, not replace it. Singh pointed to experimentation and orchestration inside the CMS as the best way to achieve speed at scale. Collins urged teams to make AI’s role visible so people know what they are reviewing and approving. Easterbrooks emphasized monitoring adoption to limit shadow AI — tools used without oversight — and to keep everything above board.

The big takeaway is simple. Treat AI as a system for scale, governance and creativity. Keep humans firmly in control, make AI’s contributions transparent and tune the tools to your brand voice. That is how digital teams can move faster without losing what makes their content distinct.