At Brightspot, our project teams work daily to design, develop, test and deploy exciting features for our customers. These efforts range from preparing for project launches to adding new features tied to major events on customer sites, such as a breaking news story or a sporting occasion.

While our teams collaborate with customers to ensure their solutions look great and function flawlessly, Brightspot’s engineering team focuses on meeting traffic and performance expectations. As we like to say, the site should not only look great but also perform just as well.

A good load test can mean the difference between a smooth site rollout or event and an overloaded site that fails to scale during real-time traffic surges. The last thing anyone wants is for visitors to see an error screen instead of the site.

Poor end-user experiences quickly translate into unhappy customers and an escalation to our on-call DevOps team, which then has to scale up the environment and resolve the issues surfaced by our performance monitoring tools. And with social media, word of a negative experience spreads quickly and can be fatal to a brand’s reputation, not to mention customer relationships.

As our CIO David Habib has noted in previous posts about dealing with high-traffic events, the key to businesses like ours is always being prepared and having mitigation plans in place for all eventualities.

For example, in 2024 our team was asked to conduct a number of load tests ahead of major site launches and large events that were expected to drive heavy traffic over a sustained period. As you’d expect, many of our larger load tests focused on major events like the 2024 Summer Olympics and the U.S. presidential election coverage in November 2024.

For these efforts, our infrastructure team collaborated closely with project teams, breaking the process into five distinct phases. Load testing is a team-wide effort, involving members from all areas of the project. As with any DevOps initiative, careful planning and scheduling are critical to ensuring success.

Here are a few examples of the types of load tests we typically conduct for Brightspot project teams:

Front-end traffic load testing

Front-end load testing is our most requested test for project teams. This test simulates both cached and uncached traffic hitting the environment simultaneously. The results provide critical insights, including:

- Verifying that DevOps settings are applied correctly.

- Experimenting with configurations to ensure optimal performance in production.

- Confirming proper traffic flow through the solution, such as cloud storage containers and content delivery networks.

- Confidently answering, “How much traffic can our site handle?”

- Ensuring the site scales dynamically with changing traffic patterns throughout the day.

- Identifying any pre-scaling needs before high-traffic events.

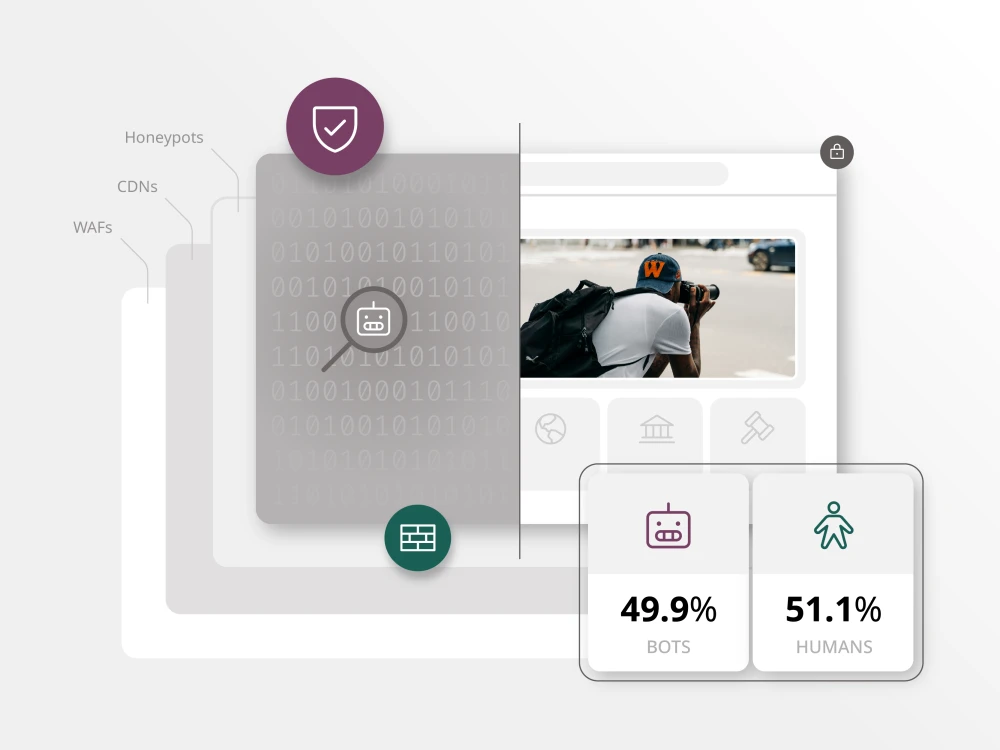

- Validating security configurations and monitoring to handle both legitimate and malicious traffic effectively.
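As a rough illustration of mixing cached and uncached traffic, here is a minimal Python sketch. It assumes the CDN includes the query string in its cache key, so a unique query parameter forces a cache miss. The parameter name `cb`, the function names and the example URLs are hypothetical and not part of Brightspot's tooling.

```python
import random
from urllib.parse import urlencode, urlsplit, urlunsplit


def with_cache_buster(url: str, token: str) -> str:
    """Append a unique query parameter ("cb" is a hypothetical name).

    Assumption: the CDN keys its cache on the full URL including the
    query string, so the rewritten URL is served as a cache miss.
    """
    parts = urlsplit(url)
    extra = urlencode({"cb": token})
    query = f"{parts.query}&{extra}" if parts.query else extra
    return urlunsplit((parts.scheme, parts.netloc, parts.path, query, parts.fragment))


def build_request_urls(pages, total, uncached_ratio, seed=0):
    """Return `total` URLs; roughly `uncached_ratio` of them bypass the cache."""
    rng = random.Random(seed)  # seeded so a test run can be reproduced
    urls = []
    for i in range(total):
        page = rng.choice(pages)
        if rng.random() < uncached_ratio:
            urls.append(with_cache_buster(page, f"{seed}-{i}"))
        else:
            urls.append(page)
    return urls


if __name__ == "__main__":
    pages = ["https://example.com/", "https://example.com/news"]
    for url in build_request_urls(pages, total=5, uncached_ratio=0.3):
        print(url)
```

If the CDN ignores query strings when building cache keys, a different mechanism (for example, a request header the cache varies on) would be needed instead.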

Mobile traffic load testing

Simulating mobile app traffic requires accounting for every aspect of GraphQL requests. Tools like Postman help us:

- Collaborate with development teams to build and test a request library.

- Validate and script requests to match expected weight levels.

- Compare simulated traffic models with real production traffic to ensure accuracy.
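To make the idea of request weights concrete, the sketch below draws requests from a weighted library and measures how far the simulated mix drifts from the expected one. The operation names and weights are invented for illustration; in practice they would come from the request library built with the development team and from production traffic data.

```python
import random
from collections import Counter

# Hypothetical GraphQL operations and relative weights (not real Brightspot data).
REQUEST_LIBRARY = {"HomeFeed": 60, "ArticleById": 30, "Search": 10}


def pick_requests(library, count, seed=0):
    """Draw `count` request names, each chosen in proportion to its weight."""
    rng = random.Random(seed)
    names = list(library)
    weights = [library[name] for name in names]
    return rng.choices(names, weights=weights, k=count)


def observed_share(samples):
    """Fraction of the sample taken up by each request name."""
    counts = Counter(samples)
    return {name: counts[name] / len(samples) for name in counts}


def max_drift(expected_weights, observed):
    """Largest absolute gap between expected and observed share."""
    total = sum(expected_weights.values())
    return max(
        abs(weight / total - observed.get(name, 0.0))
        for name, weight in expected_weights.items()
    )


if __name__ == "__main__":
    simulated = pick_requests(REQUEST_LIBRARY, 10_000, seed=1)
    print(observed_share(simulated))
    print("max drift:", max_drift(REQUEST_LIBRARY, observed_share(simulated)))
```

The same `max_drift` check could be run against shares derived from production logs to see whether the simulated model still resembles real traffic.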

GraphQL testing

For headless CMS customers, testing GraphQL requests is crucial. We provide the following metrics to project teams:

- Individual request response times.

- Request format validation against baselines.

- Response times under expected load.

- Error rates at varying load levels.

- Success rates at different traffic levels.

- Environment scalability as request volume increases.
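One possible way to derive several of these metrics from raw samples is sketched below. The `Sample` record and its fields are assumptions made for the example, and the 95th percentile is just one common choice of response-time statistic.

```python
from dataclasses import dataclass
from statistics import quantiles


@dataclass
class Sample:
    load_level: int    # concurrent virtual users when the request was sent
    elapsed_ms: float  # response time of this request
    ok: bool           # True if the response was valid and error-free


def summarize(samples):
    """Group samples by load level and compute error, success and p95 figures."""
    by_level = {}
    for sample in samples:
        by_level.setdefault(sample.load_level, []).append(sample)

    report = {}
    for level, group in sorted(by_level.items()):
        times = [s.elapsed_ms for s in group]
        errors = sum(1 for s in group if not s.ok)
        # quantiles(n=20) yields 19 cut points; the last is the 95th percentile.
        p95 = quantiles(times, n=20)[-1] if len(times) > 1 else times[0]
        report[level] = {
            "requests": len(group),
            "error_rate": errors / len(group),
            "success_rate": (len(group) - errors) / len(group),
            "p95_ms": p95,
        }
    return report


if __name__ == "__main__":
    data = [Sample(10, 100.0, True)] * 9 + [Sample(10, 500.0, False)]
    print(summarize(data))
```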

Final combined load testing

After completing individual tests, we conduct a final series of combined load tests to:

- Validate the solution’s performance at or above expected production levels, focusing on error rates and response times.

- Identify necessary configuration or code adjustments.

- Assess how much beyond expected traffic levels the environment can scale.

In addition to these baseline tests, we conduct custom load tests tailored to a project team’s estimated traffic patterns and specific needs.

Burst testing

One of our most common load tests is a burst test, which simulates a sudden spike in traffic (such as from a trending article on Facebook or another social media platform) that causes a rapid increase in page requests.

Key focus areas during burst testing:

- Warmup period: Ensures the environment isn’t starting from a cold state.

- Traffic simulation: Rapidly ramps up traffic, targeting single URLs and key pages (e.g., the homepage or trending modules), hitting both cached and uncached versions of the site.

- Scale-down period: Confirms the site can gracefully return to normal traffic levels.

- Data insights: Analyzes errors, cache hit rates, responsiveness and scalability.

- User experience feedback: Collects feedback from the project team to assess the on-page user experience during the test.
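The warmup, spike and scale-down periods can be pictured as a simple traffic curve. The following sketch is one way to express that curve in Python; the linear ramps, parameter names and example numbers are assumptions chosen for illustration, not a description of how any particular load testing tool shapes traffic.

```python
def burst_profile(t, warmup_s, ramp_s, hold_s, cooldown_s, baseline_rps, peak_rps):
    """Target requests per second at `t` seconds into a burst test.

    Shape: flat warmup at baseline, linear ramp to peak, hold at peak,
    linear scale-down back to baseline.
    """
    if t < 0:
        return 0.0
    if t < warmup_s:
        return float(baseline_rps)
    t -= warmup_s
    if t < ramp_s:
        return baseline_rps + (peak_rps - baseline_rps) * t / ramp_s
    t -= ramp_s
    if t < hold_s:
        return float(peak_rps)
    t -= hold_s
    if t < cooldown_s:
        return peak_rps - (peak_rps - baseline_rps) * t / cooldown_s
    return float(baseline_rps)


if __name__ == "__main__":
    # Hypothetical plan: 5 min warmup, 1 min ramp, 10 min hold, 5 min scale-down.
    plan = dict(warmup_s=300, ramp_s=60, hold_s=600, cooldown_s=300,
                baseline_rps=50, peak_rps=1000)
    for second in (0, 330, 360, 1110, 1260):
        print(second, burst_profile(second, **plan))
```

A short ramp relative to the hold period is what distinguishes a burst test from a gradual scaling test: the environment has little time to add capacity before peak traffic arrives.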

What are the five phases of a load test?

When a project team identifies the need for a load test, our engineers will break down the effort into five phases:

Phase 1: Learning/research

The initial phase consists of working with the project team and the customer to understand:

- Which areas of the solution the load test traffic should target. Using industry-standard tools like JMeter, we are able to run tests against all aspects of a project, such as:

  - Front-end experience

  - CMS back end

  - GraphQL framework

  - APIs

  - Mobile apps

- Expected customer traffic patterns, based on data in reporting tools like Google Analytics and Datadog, including:

  - Current daily traffic

  - Expected average hourly traffic

  - Expected peak traffic

  - Any special events that may cause a burst of traffic

  - Number of daily users

  - Number of CMS contributors

- High-profile pages that drive the biggest share of site traffic, such as the homepage, live blogs or event landing pages.

- Type of requests and format of page requests.

- Initial environment configuration, focusing on technical aspects like “time to live” (TTL), number of pods, scale ratio, size and cache configuration.

- Geographic origin of traffic. For example, if traffic is evenly distributed in the U.S., page and server load tests should originate from similarly located servers.

- Goals and concerns for the load test. What are we hoping to achieve as a result of the test?
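The traffic figures gathered in this phase eventually have to become a concrete request rate for the test. As a back-of-the-envelope sketch, the arithmetic might look like the following; the headroom factor, the requests-per-page-view multiplier and the example numbers are all assumptions, not Brightspot defaults.

```python
def target_rps(peak_hourly_page_views, requests_per_page_view=1.0, headroom=1.5):
    """Convert a peak hourly page-view estimate into a requests-per-second target.

    `headroom` is an assumed safety multiplier above the expected peak.
    """
    return peak_hourly_page_views * requests_per_page_view / 3600 * headroom


def origin_rps(total_rps, cache_hit_ratio):
    """Requests per second expected to reach the origin after CDN caching."""
    if not 0.0 <= cache_hit_ratio <= 1.0:
        raise ValueError("cache_hit_ratio must be between 0 and 1")
    return total_rps * (1.0 - cache_hit_ratio)


if __name__ == "__main__":
    # Hypothetical estimate: 3.6 million page views in the busiest hour.
    rps = target_rps(3_600_000, requests_per_page_view=1.0, headroom=1.5)
    print("edge target rps:", rps)
    print("origin rps at 90% cache hit ratio:", origin_rps(rps, 0.9))
```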

Phase 2: Planning

Once the learning phase is complete, we develop a test plan to keep the load test organized and well run. The plan tells the team exactly what the upcoming test will consist of and what details need to be finalized during the scripting process.

During this planning phase, the team works to answer questions like:

- In which environment should we conduct the test?

- Do we need any updates or configuration changes to the environment before conducting the tests?

- In what regions should we run the test?

- Based on expected levels of traffic, what size test bot/engine do we need to use? (Here, we need to balance performance and cost.)

- When can we conduct the test?

- Who needs to be notified before starting the test?

- Duration of the test, including:

  - How long the warmup period should be.

  - How large of a spike and what type of traffic we want to simulate.

  - How long the cooldown period should run before starting the next test. (This allows us to confirm that scaling returns to normal as traffic decreases.)

- What type of load test we want to conduct. For example, a burst test is one of the most common tests we conduct for teams in today’s world of social media shares and breaking news.
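The region and engine-size questions lend themselves to a quick calculation. The sketch below estimates how many load generators each region would need; the region names, traffic shares and per-engine capacity are hypothetical, and real engine capacity depends on the tool, the instance size and the requests being scripted.

```python
import math


def engines_per_region(target_rps, region_share, rps_per_engine):
    """Estimate load-generator count per region.

    `region_share` maps a region name to its fraction of total traffic;
    `rps_per_engine` is an assumed sustainable rate for one engine.
    """
    if rps_per_engine <= 0:
        raise ValueError("rps_per_engine must be positive")
    if abs(sum(region_share.values()) - 1.0) > 1e-6:
        raise ValueError("region shares must sum to 1")
    return {
        region: math.ceil(target_rps * share / rps_per_engine)
        for region, share in region_share.items()
    }


if __name__ == "__main__":
    # Hypothetical plan: 1,000 rps split 75/25 between two U.S. regions.
    print(engines_per_region(1000, {"us-east": 0.75, "us-west": 0.25}, 200))
```

Rounding up per region slightly overshoots the total, which trades a little extra cost for the assurance that no single engine becomes the bottleneck.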

Phase 3: Scripting

During this phase of the load test, we work closely with development and operations teams to:

- Ensure the proper request formats and parameters.

- Discuss the weight of each request based on the expected number of requests production is likely to receive during a big event.

- Determine the expected cached versus non-cached ratio.

- Determine the volume of mobile requests versus web requests.

- Ensure that the script passes with low levels of traffic and matches the traffic patterns of the production environment, if available.
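The cached versus non-cached ratio and the mobile versus web split combine into a per-bucket request rate that the script has to reproduce. A minimal sketch of that breakdown follows; it assumes, purely for simplicity, that mobile and web traffic share the same uncached ratio, which may not hold for a real project.

```python
def request_mix(total_rps, mobile_share, uncached_ratio):
    """Split a total request rate into (channel, cache state) buckets.

    Assumption: web and mobile traffic have the same uncached ratio.
    """
    for name, value in (("mobile_share", mobile_share), ("uncached_ratio", uncached_ratio)):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be between 0 and 1")
    mobile = total_rps * mobile_share
    web = total_rps - mobile
    return {
        ("web", "cached"): web * (1.0 - uncached_ratio),
        ("web", "uncached"): web * uncached_ratio,
        ("mobile", "cached"): mobile * (1.0 - uncached_ratio),
        ("mobile", "uncached"): mobile * uncached_ratio,
    }


if __name__ == "__main__":
    # Hypothetical event: 1,000 rps, 25% from mobile apps, half bypassing cache.
    for bucket, rps in request_mix(1000, mobile_share=0.25, uncached_ratio=0.5).items():
        print(bucket, rps)
```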

Phase 4: Conducting the tests

Before running the tests, we schedule a dedicated block of time and notify the operations, project and customer teams about the planned testing activities. Tests are conducted from multiple regions based on the details outlined in the test plan.

While the tests are running, a team of engineers monitors them in real time to track metrics using tools like Datadog and JMeter.

Once the tests are completed, a test report is generated that includes the results of the test and outlines any next steps or investigations needed, if applicable.
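For a sense of what feeds such a report, the sketch below summarizes a JMeter results file per request label. It assumes the results were saved as CSV with a header row containing the `label`, `elapsed` and `success` columns; the sample rows are made up, and a real results file typically carries more columns than shown here.

```python
import csv
import io
from collections import defaultdict

# Made-up sample in the shape of a JMeter CSV results file (subset of columns).
SAMPLE_JTL = """timeStamp,elapsed,label,responseCode,success
1700000000000,120,Homepage,200,true
1700000000100,480,Homepage,503,false
1700000000200,90,GraphQL article,200,true
"""


def summarize_jtl(csv_text):
    """Return count, error rate and average response time per request label."""
    totals = defaultdict(lambda: {"count": 0, "errors": 0, "total_ms": 0})
    for row in csv.DictReader(io.StringIO(csv_text)):
        entry = totals[row["label"]]
        entry["count"] += 1
        entry["total_ms"] += int(row["elapsed"])
        if row["success"].strip().lower() != "true":
            entry["errors"] += 1
    return {
        label: {
            "count": e["count"],
            "error_rate": e["errors"] / e["count"],
            "avg_ms": e["total_ms"] / e["count"],
        }
        for label, e in totals.items()
    }


if __name__ == "__main__":
    for label, stats in summarize_jtl(SAMPLE_JTL).items():
        print(label, stats)
```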

Phase 5: Final review and reporting

Once the last test is conducted, the team generates a final report comparing actual test performance against the targets outlined in the test plan.

As part of this review, we capture:

- Any production environment changes to make prior to launch.

- Error rates and response times for each request tested (including a chart showing all requests, error rates and response times).

- Lessons learned and improvements for the next series of tests.

- Any Jira bug reports or future tasks the team needs to follow up on.
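A comparison of results against the plan can be sketched as a simple threshold check. The field names, thresholds and request labels below are hypothetical; a real review would also weigh context that a pass/fail check cannot capture.

```python
def review(results, targets):
    """Compare measured results with test-plan targets and list any findings.

    Both arguments map a request label to a dict of metrics; the keys used
    here (`error_rate`, `p95_ms`, `max_error_rate`, `max_p95_ms`) are assumed.
    """
    findings = []
    for label, target in targets.items():
        actual = results.get(label)
        if actual is None:
            findings.append(f"{label}: not exercised during the test")
            continue
        if actual["error_rate"] > target["max_error_rate"]:
            findings.append(
                f"{label}: error rate {actual['error_rate']:.2%} "
                f"exceeds target {target['max_error_rate']:.2%}"
            )
        if actual["p95_ms"] > target["max_p95_ms"]:
            findings.append(
                f"{label}: p95 {actual['p95_ms']:.0f} ms "
                f"exceeds target {target['max_p95_ms']:.0f} ms"
            )
    return findings


if __name__ == "__main__":
    targets = {
        "Homepage": {"max_error_rate": 0.01, "max_p95_ms": 500},
        "Search": {"max_error_rate": 0.01, "max_p95_ms": 800},
    }
    results = {"Homepage": {"error_rate": 0.02, "p95_ms": 450}}
    for finding in review(results, targets):
        print(finding)
```

Each finding could then be turned into a follow-up task or bug report for the team.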