By Maria Bishirjian, Paul Easterbrooks and Tom Neff

Many content leaders have felt it: the gap between what AI promises and what it actually delivers in day-to-day operations. You’ve probably experimented with ChatGPT or other AI tools, but when it comes to the real work of creating content for multiple channels, updating older content or keeping knowledge bases current, AI still feels disconnected from where the work actually happens.

That’s starting to change.

What’s the real difference between AI chatbots and agentic AI?

The industry is moving toward “agentic AI,” systems that don’t just respond to prompts but can take action across multiple steps and systems. Instead of asking AI to write a social post, you describe an outcome: “Find our best-performing articles from Q4 and create a social campaign around similar themes.” The AI figures out the intermediate steps, queries multiple systems and executes the work. With the introduction of the Model Context Protocol, this is no longer hypothetical.

What is Model Context Protocol, or MCP?

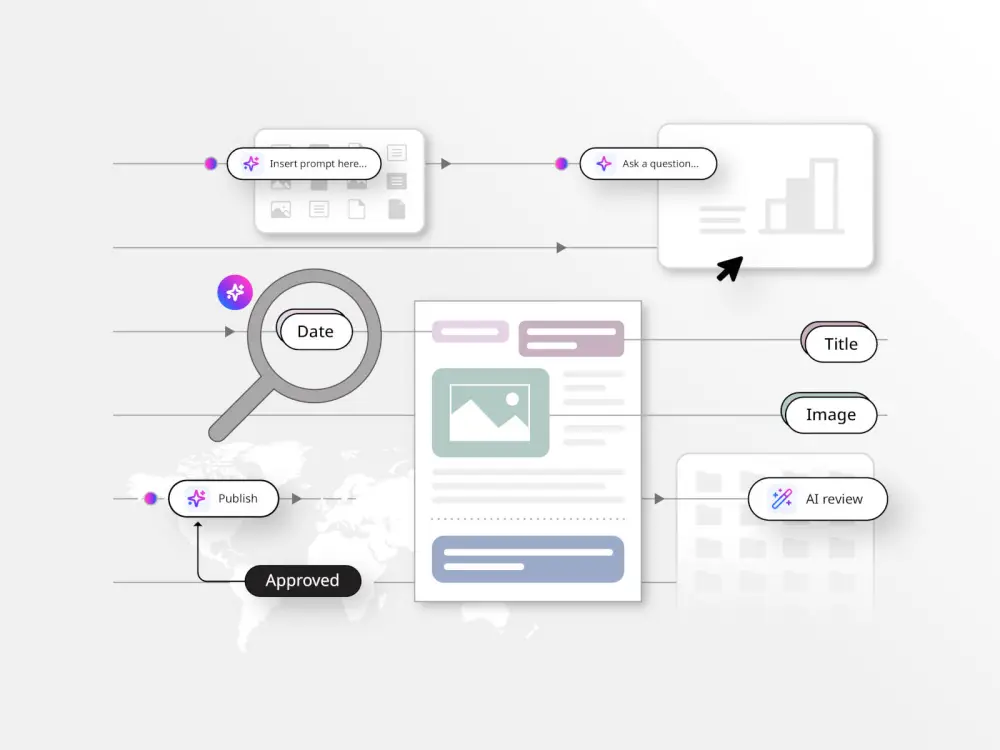

The Model Context Protocol (MCP), an open framework originally developed by Anthropic, has emerged as the connective tissue making this possible. MCP provides a standardized way for AI models to discover what actions are available in a connected system, understand the context needed to use them and execute operations through a consistent interface. Think of it as a common language that lets AI agents interact with your CMS, analytics platform, project management tools and other systems without requiring custom integrations for each combination.

How does MCP architecture work?

The architecture is straightforward: an MCP server exposes your system’s capabilities (for a CMS, that might mean querying content, updating records and triggering workflows) through machine-readable definitions. AI agents connect to the server, discover what’s available and make structured requests to perform actions. The server handles the translation between what the AI asks for and what your system actually does.
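The discover-then-call pattern is easier to see in code. Below is a minimal Python sketch of the request handling an MCP server performs. MCP messages are JSON-RPC 2.0, and `tools/list` and `tools/call` are the protocol’s methods for discovering and invoking tools. Everything else is an assumption made for illustration: the in-memory `CMS` dictionary, the tool names `search_content` and `update_alt_text`, and the `handle` function are hypothetical. The sketch also skips the transport, the initialization handshake and the authentication that a real server, or one built on the official MCP SDKs, would need.

```python
import json
from typing import Any, Callable

# Hypothetical in-memory content store standing in for a real CMS.
CMS: dict[str, dict[str, str]] = {
    "img-1": {"type": "image", "alt": ""},
    "img-2": {"type": "image", "alt": "Editors reviewing a page proof"},
}


def search_content(missing_alt: bool = False) -> list[str]:
    """Return image IDs, optionally only those with no alt text."""
    return [
        item_id
        for item_id, item in CMS.items()
        if item["type"] == "image" and (not missing_alt or not item["alt"])
    ]


def update_alt_text(item_id: str, alt: str) -> str:
    """Write alt text back to the store."""
    CMS[item_id]["alt"] = alt
    return f"updated {item_id}"


# Machine-readable definitions: a name, a description and a JSON Schema for the inputs.
TOOLS: dict[str, tuple[dict[str, Any], Callable[..., Any]]] = {
    "search_content": (
        {
            "name": "search_content",
            "description": "List images, optionally only those missing alt text.",
            "inputSchema": {"type": "object", "properties": {"missing_alt": {"type": "boolean"}}},
        },
        search_content,
    ),
    "update_alt_text": (
        {
            "name": "update_alt_text",
            "description": "Set the alt text of one image.",
            "inputSchema": {
                "type": "object",
                "properties": {"item_id": {"type": "string"}, "alt": {"type": "string"}},
                "required": ["item_id", "alt"],
            },
        },
        update_alt_text,
    ),
}


def _error(request_id: Any, code: int, message: str) -> dict[str, Any]:
    return {"jsonrpc": "2.0", "id": request_id, "error": {"code": code, "message": message}}


def handle(request: dict[str, Any]) -> dict[str, Any]:
    """Answer one JSON-RPC 2.0 request: discovery (tools/list) or execution (tools/call)."""
    if request["method"] == "tools/list":
        result: dict[str, Any] = {"tools": [definition for definition, _ in TOOLS.values()]}
    elif request["method"] == "tools/call":
        name = request["params"]["name"]
        if name not in TOOLS:
            return _error(request["id"], -32602, f"Unknown tool: {name}")
        _, fn = TOOLS[name]
        # Translate the structured request into a call on the underlying system.
        output = fn(**request["params"].get("arguments", {}))
        result = {"content": [{"type": "text", "text": json.dumps(output)}], "isError": False}
    else:
        return _error(request["id"], -32601, "Method not found")
    return {"jsonrpc": "2.0", "id": request["id"], "result": result}
```

In this sketch, an agent would first send `tools/list` and read the schemas. It would then send a request such as `{"jsonrpc": "2.0", "id": 2, "method": "tools/call", "params": {"name": "search_content", "arguments": {"missing_alt": true}}}`, which returns `["img-1"]` as text content.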

The implications for content operations are significant.

What this looks like in practice

The value of AI that can actually participate in content operations becomes clear when you look at some real-world tasks that consume disproportionate time relative to their complexity.

- The image metadata backlog. Most content teams have thousands of images with missing or incomplete alt text. Everyone knows it matters for accessibility and SEO. No one has time to fix it systematically. An AI agent connected to your CMS can locate images from the past year missing alt text, analyze each image, generate alt text descriptions and update the records, flagging anything that needs human review. What would take weeks of tedious work becomes a supervised process that runs in the background.

- The focus keyword gap. Focus keywords are foundational for SEO and increasingly for generative engine optimization, yet they’re often forgotten in the rush to publish or neglected entirely on older content. An agent can scan your content library for articles missing focus keywords, analyze each piece to determine an appropriate keyword, queue the suggestions in batches for human review and republish the approved changes. You set the parameters (date range, content types, review batch size) and the system handles the execution.

- Keeping documentation current. In large organizations, knowledge management content drifts out of date constantly. New products launch, policies change and documentation that was accurate six months ago now requires another update. An AI agent can perform broad content analysis to identify gaps and outdated information in ways that traditional search cannot. When a new software release ships, an agent can read the release notes, connect to your issue tracking system to pull ticket details, identify all related documentation in your CMS and draft updates for each affected article. What previously required a human to act as coordinator across multiple systems becomes an automated workflow with human review at the end.
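The alt-text and focus-keyword examples follow the same pattern. The agent selects in-scope items, proposes changes and applies nothing until a person approves them. The Python sketch below shows that pattern using the parameters mentioned above (date range, content types, review batch size). The `Article` and `Proposal` types and the `plan_batches` and `apply_approved` functions are hypothetical. `suggest_keyword` is a deliberately naive word-frequency placeholder for what would really be a model call, and republishing is left out.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import date


@dataclass
class Article:
    id: str
    content_type: str
    published: date
    body: str
    focus_keyword: str = ""


@dataclass
class Proposal:
    article_id: str
    keyword: str
    approved: bool = False  # set by a human reviewer; nothing is applied until then


def suggest_keyword(body: str) -> str:
    """Placeholder for a model call: the most frequent word of five or more letters."""
    words = [w.strip(".,;:!?").lower() for w in body.split()]
    candidates = [w for w in words if len(w) >= 5]
    return Counter(candidates).most_common(1)[0][0] if candidates else ""


def plan_batches(
    articles: list[Article], start: date, end: date, content_types: set[str], batch_size: int
) -> list[list[Proposal]]:
    """Select in-scope articles missing a focus keyword and group proposals for review."""
    in_scope = [
        a for a in articles
        if not a.focus_keyword and start <= a.published <= end and a.content_type in content_types
    ]
    proposals = [Proposal(a.id, suggest_keyword(a.body)) for a in in_scope]
    return [proposals[i:i + batch_size] for i in range(0, len(proposals), batch_size)]


def apply_approved(articles: list[Article], batch: list[Proposal]) -> list[str]:
    """Write back only approved, non-empty proposals; return the IDs that changed."""
    by_id = {a.id: a for a in articles}
    applied = []
    for p in batch:
        if p.approved and p.keyword:
            by_id[p.article_id].focus_keyword = p.keyword
            applied.append(p.article_id)
    return applied


library = [
    Article("a1", "article", date(2024, 3, 1), "Content teams automate content audits."),
    Article("a2", "article", date(2024, 5, 9), "Accessibility audits raise accessibility scores."),
    Article("a3", "gallery", date(2024, 6, 2), "Photos from the launch event."),
    Article("a4", "article", date(2023, 1, 1), "An older piece outside the date range."),
]
batches = plan_batches(library, date(2024, 1, 1), date(2024, 12, 31), {"article"}, batch_size=1)
batches[0][0].approved = True  # a reviewer approves the first batch only
```

Separating planning from applying keeps the agent’s work reviewable. The approval flag is the only path from a suggestion to a change in the library.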

The governance question

As AI gains an active role in content operations, the question of control becomes urgent. Who’s accountable when an agent makes changes across hundreds of assets? How do you maintain brand integrity when AI is operating at scale?

Most of the industry conversation around MCP has focused on capability and connectivity: what AI can do, how to set up servers, security checklists for developers. Less attention has been paid to the operational reality of deploying AI in environments where mistakes have consequences and where “move fast and break things” isn’t an acceptable approach.

Organizations that can deploy AI confidently, knowing exactly what it’s doing and maintaining appropriate oversight, will be able to move faster at scale and mitigate risk.

Most importantly, AI should operate within your existing permission model. If a human editor doesn’t have rights to publish without approval, neither should an AI agent acting on their behalf. The same roles and restrictions that govern human users should constrain automated processes.

What we’re building, safely

At Brightspot, we’ve approached MCP implementation with a specific perspective: the value of AI agents in content operations depends entirely on whether you can trust them to operate safely within your organization’s rules.

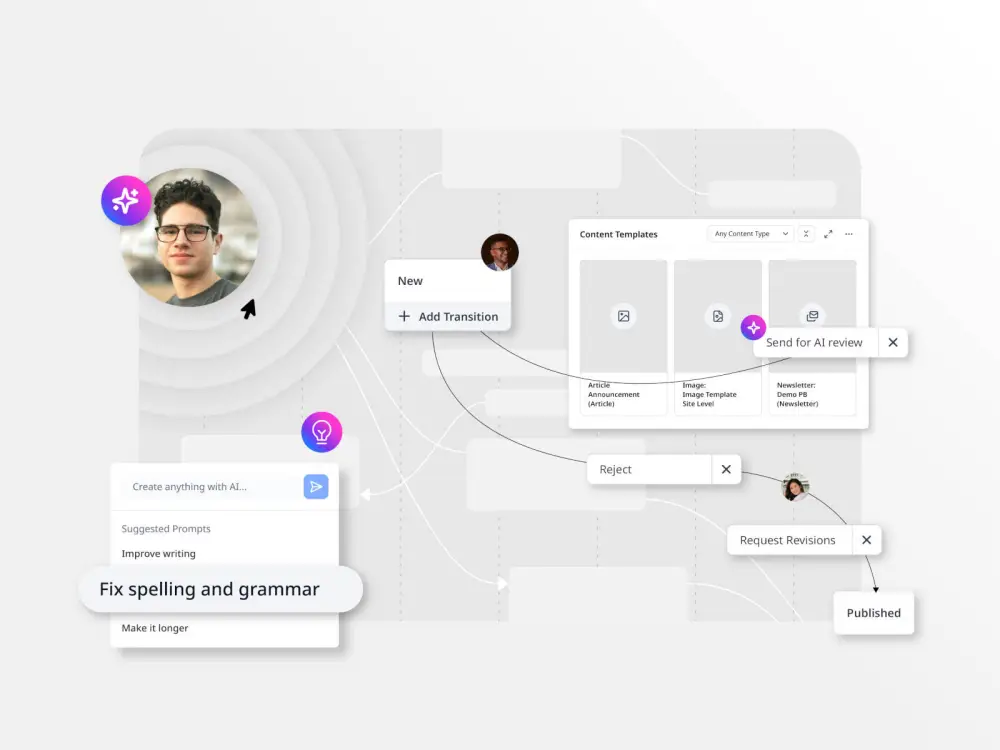

Our MCP server isn’t a third-party add-on. Brightspot is embedding agent orchestration at the platform level, which means that AI agents can be constrained by the same permissions, roles and rules that apply to human users. This ensures agents operate within existing editorial, brand and compliance boundaries. AI agents can then interact with Brightspot the way a human user would: creating content, updating metadata, moving items through workflows. An AI operating on behalf of a junior editor gets that editor’s access rights, not elevated permissions, and can be restricted to specific content types, workflow actions or specific sites within a multisite environment.

We believe this is essential in order for agents to behave responsibly. Security for AI agents should work the same way it works for human users: defined by administrators through familiar role-based permissions.
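The rule that an agent gets its editor’s access rights, and never more, can be expressed in a simple way. Compute the agent’s effective permissions as the intersection of the editor’s role and any extra limits an administrator sets. The Python sketch below assumes a simplified role model in which content types, workflow actions and sites are plain sets. `Role`, `effective_permissions` and `can` are hypothetical names, not Brightspot’s permission API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Role:
    """Hypothetical, simplified role: which content, which actions, on which sites."""
    content_types: frozenset[str]
    actions: frozenset[str]  # e.g. "edit", "submit", "publish"
    sites: frozenset[str]


def effective_permissions(user: Role, agent_limits: Optional[Role] = None) -> Role:
    """An agent acting for `user` gets the user's rights, optionally narrowed, never widened."""
    if agent_limits is None:
        return user
    # Set intersection can only remove rights, so limits cannot elevate the agent.
    return Role(
        content_types=user.content_types & agent_limits.content_types,
        actions=user.actions & agent_limits.actions,
        sites=user.sites & agent_limits.sites,
    )


def can(role: Role, action: str, content_type: str, site: str) -> bool:
    return action in role.actions and content_type in role.content_types and site in role.sites


# A junior editor who can edit and submit for approval, but not publish.
junior_editor = Role(
    frozenset({"article", "image"}), frozenset({"edit", "submit"}), frozenset({"news", "sports"})
)
# Administrator-defined limits for an alt-text agent; listing "publish" here grants nothing extra.
alt_text_limits = Role(frozenset({"image"}), frozenset({"edit", "publish"}), frozenset({"news"}))
agent = effective_permissions(junior_editor, alt_text_limits)
```

In this example, the agent can edit images on the news site. It cannot publish, because the junior editor cannot publish. It cannot touch articles or the sports site, because the administrator’s limits exclude them.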

We’re also introducing automation capabilities that run parallel to human workflows rather than replacing them. The goal isn’t to remove humans from the loop. It’s to remove the repetitive clicking and manual steps that slow teams down while preserving human judgment where it matters. Automations can be triggered by events (content reaching a workflow stage, an article being published) or by schedule, but they exist alongside your editorial workflows rather than inside them. Human processes stay human. Machine processes stay visible and controllable.
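Here is a rough Python sketch of the trigger model described above: event-driven or scheduled automations that sit beside the editorial workflow and record what they do. The event names, `Automation`, `AutomationRunner` and its `emit` and `tick` methods are hypothetical. The sketch is meant to show two things only. First, the workflow notifies automations through `emit` rather than embedding them as steps. Second, every run is written to an audit log.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from typing import Callable, Optional


@dataclass
class Automation:
    name: str
    action: Callable[[dict], str]
    on_event: Optional[str] = None  # event trigger, e.g. "content.published" (hypothetical name)
    every: Optional[timedelta] = None  # schedule trigger
    last_run: Optional[datetime] = None


@dataclass
class AutomationRunner:
    automations: list[Automation] = field(default_factory=list)
    audit_log: list[str] = field(default_factory=list)  # keeps machine actions visible

    def emit(self, event: str, payload: dict) -> None:
        """Called by the editorial workflow; the workflow itself does not wait or change."""
        for automation in self.automations:
            if automation.on_event == event:
                self._run(automation, payload)

    def tick(self, now: datetime) -> None:
        """Called periodically; runs scheduled automations whose interval has elapsed."""
        for automation in self.automations:
            due = automation.last_run is None or now - automation.last_run >= automation.every
            if automation.every is not None and due:
                automation.last_run = now
                self._run(automation, {"time": now.isoformat()})

    def _run(self, automation: Automation, payload: dict) -> None:
        result = automation.action(payload)
        self.audit_log.append(f"{automation.name}: {result}")


runner = AutomationRunner([
    Automation("alt-text-check", lambda p: f"checked {p['id']}", on_event="content.published"),
    Automation("stale-docs-scan", lambda p: "scanned documentation", every=timedelta(days=1)),
])
runner.emit("content.published", {"id": "article-42"})
runner.tick(datetime(2025, 1, 6, 9, 0))   # first scheduled run
runner.tick(datetime(2025, 1, 6, 12, 0))  # less than a day later, so skipped
```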

Where this is heading

The window for treating AI as an experimental add-on has closed. MCP servers are becoming expected infrastructure.

The differentiation will come from implementation philosophy. While some approaches prioritize speed, we think the organizations that gain lasting advantage will be those that can deploy AI confidently because they trust their systems to behave predictably. That means AI that works within existing processes, respects established permission models, and keeps humans in control of the decisions that matter.

Ultimately, the best approach is to deliver AI capabilities that content teams can actually use and trust.

Most AI tools today work in isolation. You write a prompt, get a response and then manually move that output into your CMS or workflow. Agentic AI is different because it can interact directly with your content management system, performing tasks like creating, updating and routing content within your existing editorial processes rather than outside of them.

MCP stands for Model Context Protocol. It’s a standardized framework that allows AI models to communicate with applications. For content teams, it means an AI assistant can connect to your CMS and work with your actual content rather than operating in a vacuum. It gives AI the ability to see and act on what’s in your system, not just generate new text from scratch.

Brightspot works with leading AI models out of the box, so if your team just wants to get started quickly, the setup is straightforward. But if you have a preferred LLM provider or run a private model, Brightspot’s flexible architecture lets you bring your own.

Because MCP is a standardized protocol, AI agents can communicate with multiple MCP-enabled systems simultaneously. For example, an agent could pull performance data from an analytics platform and then use that insight to inform content creation within Brightspot.
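Here is a minimal Python sketch of that cross-system pattern. The agent discovers tools on each connected server with `tools/list`, records which server owns each tool and routes `tools/call` requests to that server. The `make_server` stand-in, the server names `analytics` and `cms`, and the `top_articles` and `create_draft` tools are all hypothetical. A real deployment would talk to separate MCP server processes over a transport rather than to in-process functions.

```python
import json
from typing import Any, Callable

Handler = Callable[[dict[str, Any]], dict[str, Any]]


def make_server(tools: dict[str, Callable[..., Any]]) -> Handler:
    """Hypothetical in-process stand-in for an MCP server exposing `tools`."""

    def handle(request: dict[str, Any]) -> dict[str, Any]:
        if request["method"] == "tools/list":
            result = {"tools": [{"name": n, "inputSchema": {"type": "object"}} for n in tools]}
        else:  # assume "tools/call"
            params = request["params"]
            output = tools[params["name"]](**params.get("arguments", {}))
            result = {"content": [{"type": "text", "text": json.dumps(output)}]}
        return {"jsonrpc": "2.0", "id": request["id"], "result": result}

    return handle


class MultiServerAgent:
    """Discovers tools on several servers and routes each call to the server that owns it."""

    def __init__(self, servers: dict[str, Handler]) -> None:
        self.servers = servers
        self.routes: dict[str, tuple[str, str]] = {}  # "server.tool" -> (server, tool)
        self._next_id = 0
        for server_name in servers:
            for tool in self._request(server_name, "tools/list", {})["tools"]:
                self.routes[f"{server_name}.{tool['name']}"] = (server_name, tool["name"])

    def _request(self, server_name: str, method: str, params: dict[str, Any]) -> dict[str, Any]:
        self._next_id += 1
        request = {"jsonrpc": "2.0", "id": self._next_id, "method": method, "params": params}
        return self.servers[server_name](request)["result"]

    def call(self, qualified_name: str, **arguments: Any) -> Any:
        server_name, tool_name = self.routes[qualified_name]
        result = self._request(server_name, "tools/call", {"name": tool_name, "arguments": arguments})
        return json.loads(result["content"][0]["text"])


# Usage: pull insight from one system, then act on it in another.
drafts: list[str] = []


def create_draft(title: str) -> str:
    drafts.append(title)
    return f"draft-{len(drafts)}"


analytics = make_server({"top_articles": lambda quarter: ["AI in newsrooms", "Alt text at scale"]})
cms = make_server({"create_draft": create_draft})
agent = MultiServerAgent({"analytics": analytics, "cms": cms})

for title in agent.call("analytics.top_articles", quarter="Q4"):
    agent.call("cms.create_draft", title=f"Social post: {title}")
```

Prefixing each tool with its server name is one simple way to avoid collisions when two systems expose tools with the same name. Other naming schemes would work equally well.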